Visualizing: What We Don’t Know (Pt. I)

The Written Record

When oral histories are collected for the Veterans History Project, veterans also answer a few written questions. Once compiled, these written answers form a useful index to the archive.

The Veterans History Project’s search function works by referring to this index. It quickly sorts the archive by gender, by conflict, by branch, and so forth.

As you’ve seen in previous posts, this same information can also lend itself to deeper analysis of the archive.

A Question of Structures

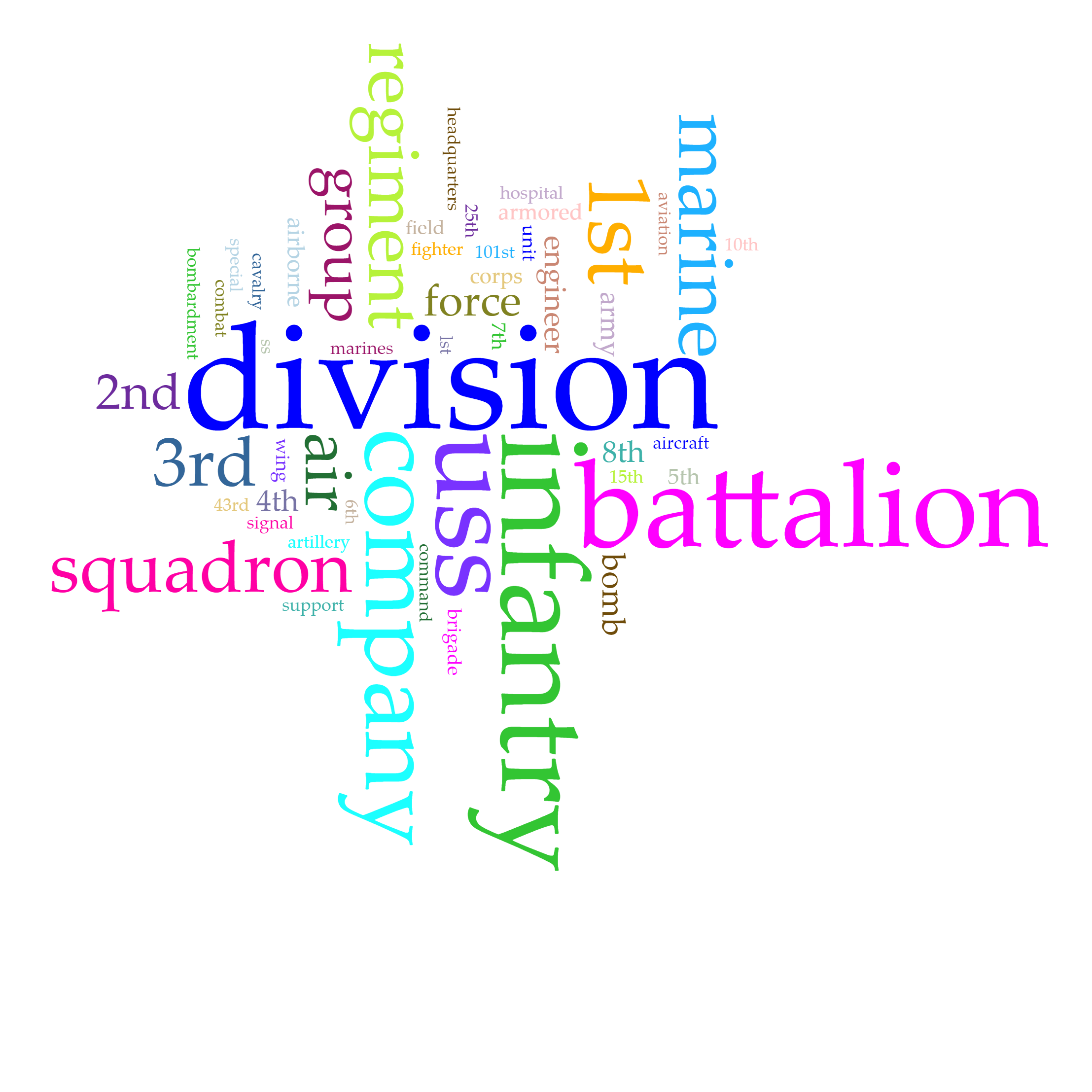

Unfortunately, some parts of the index are less usable than others. Almost every veteran recorded the units or ships they served with. However, the answers were not given in a standardized format, so they vary widely in precision and style.

Some veterans offered their battalion or division information only, while others meticulously listed everything from company to corps. Still others listed the same information, but in reverse order!

The same happened with Air Force, Marine Corps, and Navy structures. Some veterans recorded only a fleet name, while others listed dozens of individual ships.

This wide variety of responses means that while the data can be searched, we cannot guarantee reliable results. We also can’t analyze this data the same way we examined gender and age.

Where in the World?

A similar problem occurred when interviewers offered veterans two confusingly similar fields:

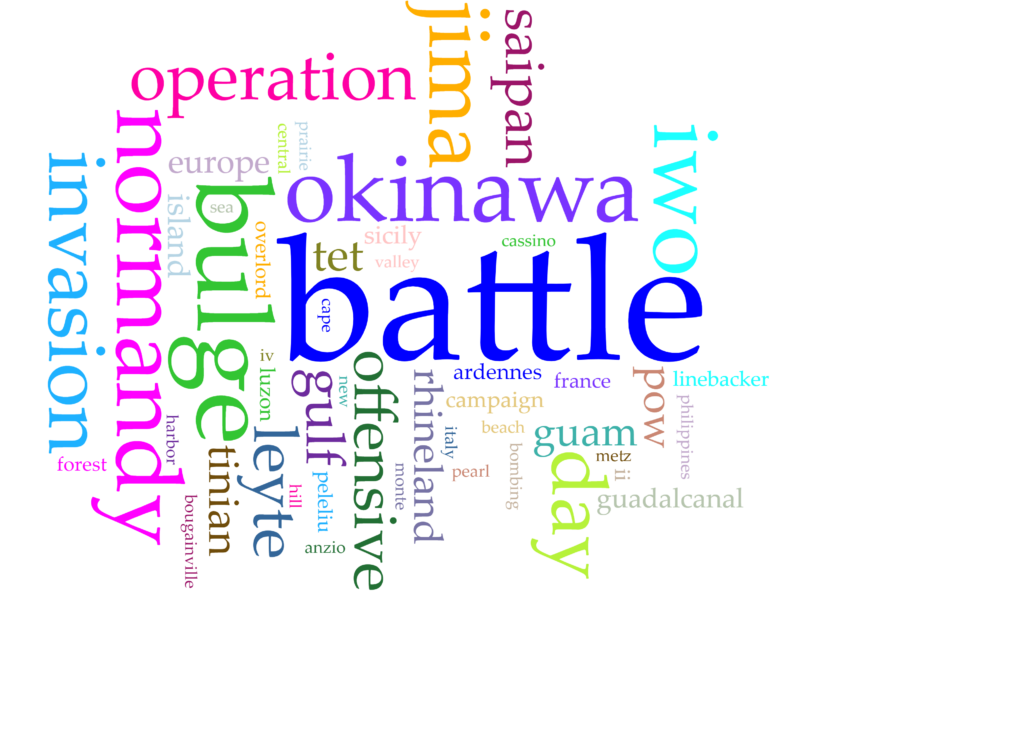

Battles?

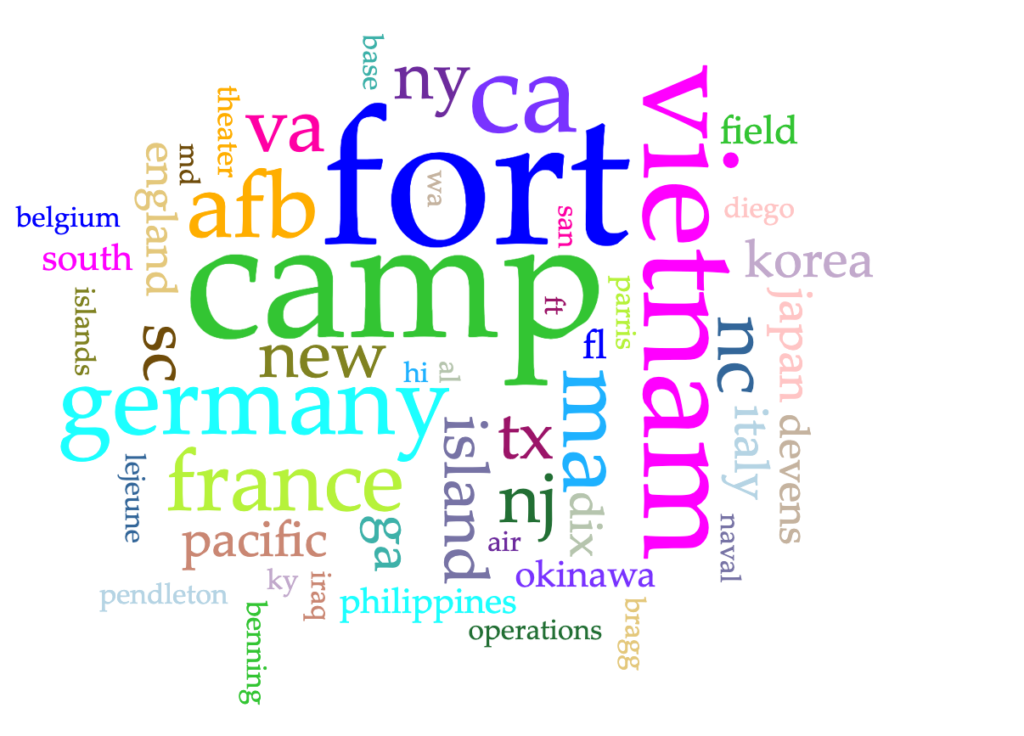

Served in?

The latter question is intended to capture geographic location only, while the former is meant to cover combat operations exclusively. Unfortunately, the two were often confused.

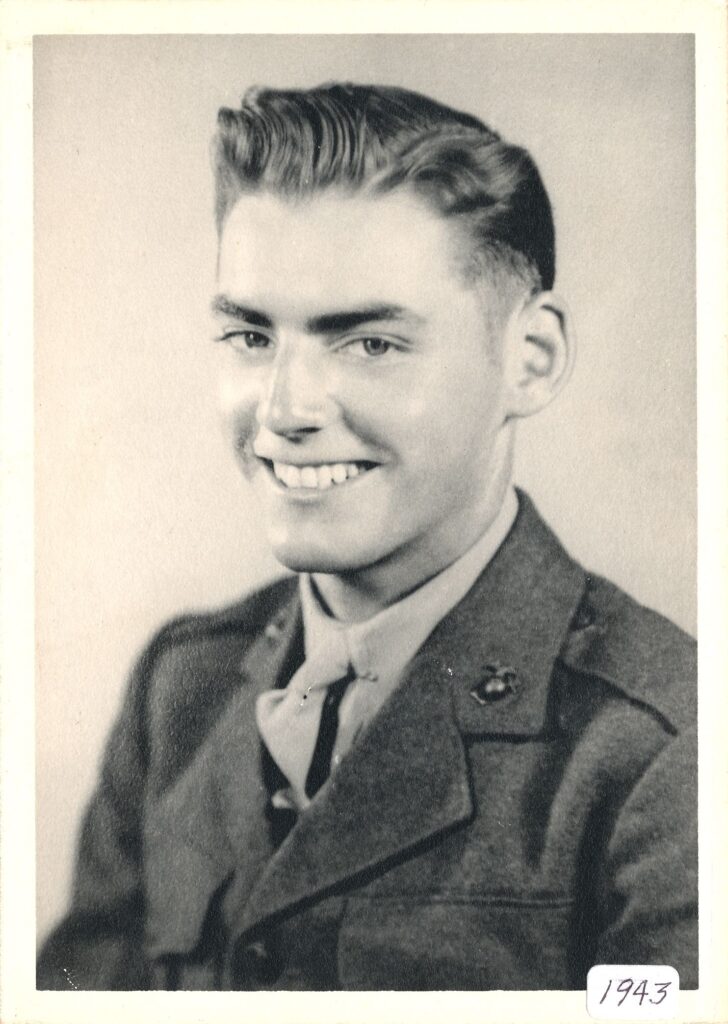

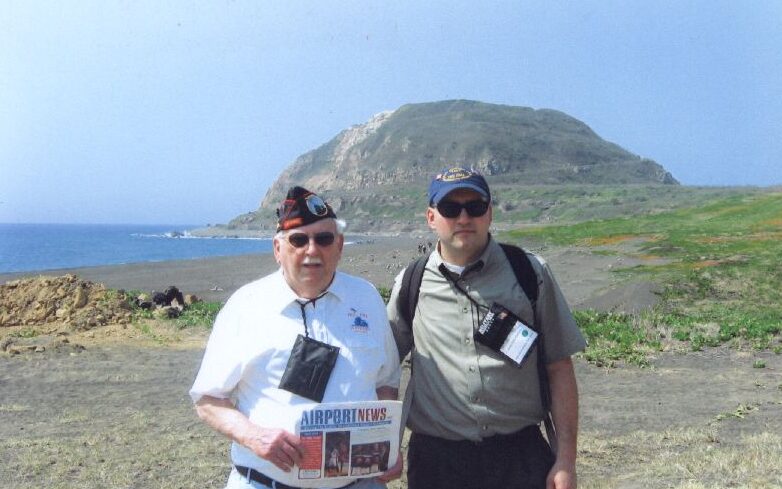

Stanley Dabrowski is one example of a veteran with an inconsistent entry. As a Corpsman, he landed in the 3rd assault wave at Iwo Jima. In his interviews, he describes the horror of that battle in vivid detail.

But his entry lists zero battles. Search for “Iwo Jima” in the Battles field and you won’t find Dabrowski. Instead, Iwo Jima appears in his Served In field, alongside places like Camp Pendleton, CA, and Hilo, HI.

Entries like this pose a major challenge to archivists. We have to locate inaccurate entries before verifying and correcting them individually. No small task with a database approaching 800 entries!
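One way to narrow the search is to let a script flag likely candidates before anyone verifies them by hand. The sketch below is a hypothetical example, not the project’s actual workflow: it assumes each entry has been exported with fields we have named `name`, `battles`, and `served_in`, and it checks a short, illustrative list of battle names that we chose ourselves. It flags any entry where a known battle appears under “Served in” but not under “Battles”.

```python
# Hypothetical list of battle names to check; not an official VHP vocabulary.
KNOWN_BATTLES = ["Iwo Jima", "Okinawa", "D-Day", "Battle of the Bulge", "Guadalcanal"]


def flag_misfiled(entries, battles=KNOWN_BATTLES):
    """Return (name, battle) pairs where a battle appears in 'served_in'
    but not in 'battles'. Field names are assumptions for this sketch."""
    flagged = []
    for entry in entries:
        served = entry.get("served_in", "").lower()
        fought = entry.get("battles", "").lower()
        for battle in battles:
            b = battle.lower()
            # Simple case-insensitive substring match; a real pass would
            # also need to handle spelling variants.
            if b in served and b not in fought:
                flagged.append((entry["name"], battle))
    return flagged


# Illustrative records; the second one is entirely made up.
records = [
    {"name": "Stanley Dabrowski", "battles": "",
     "served_in": "Camp Pendleton, CA; Hilo, HI; Iwo Jima"},
    {"name": "Example Veteran", "battles": "Okinawa", "served_in": "Okinawa"},
]
print(flag_misfiled(records))
```

A script like this only produces a shortlist. Each flagged entry still has to be checked against the interview itself before it is corrected.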

Working With What You Have

Despite these inconsistencies, the data in these fields can still be utilized. We just have to think outside the box.

In this case, our goal is to create an impression of this mostly accurate data. The few errors within the larger data pool won’t register if our analysis occurs on a broad enough scale. And Voyant.org offers the perfect tool for this.

Word clouds create an immediate impression by sizing each word according to how often it occurs. Visualizing the 50 most frequent words across the entire archive’s responses to the “Unit/Ship” question provides a basic overview. It’s clear that most veterans served in the infantry, while the oversized “USS” suggests many ships whose individual names each appear too rarely to stand out on their own.
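Under the hood, a word cloud starts from a simple frequency count. The sketch below shows that counting step in Python. It is a generic illustration, not Voyant’s implementation: the tokenizer, the tiny stopword set, and the sample answers are all assumptions made for this example.

```python
import re
from collections import Counter


def top_words(responses, n=50, stopwords=frozenset({"and", "the", "of", "in", "to"})):
    """Count word frequencies across all responses and return the n most common.
    The stopword set here is a small placeholder, not Voyant's list."""
    counts = Counter()
    for text in responses:
        # Lowercase, then keep runs of letters and digits (so "2nd" stays whole).
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            if word not in stopwords:
                counts[word] += 1
    return counts.most_common(n)


# Made-up "Unit/Ship" answers for illustration only.
answers = [
    "Company B, 2nd Battalion, 5th Infantry",
    "USS Enterprise",
    "USS Missouri",
    "34th Infantry Division",
]
print(top_words(answers, n=3))
```

Even in this toy sample, “uss” ties for the top spot while each ship name appears only once, which is the same pattern the “Unit/Ship” cloud shows at scale.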

More helpfully, the separate clouds for “Battles” and “Served in” reveal how the two fields differ. Military bases dominate the “Served in” cloud, while battle names are far more prominent in the “Battles” cloud.

These tools are far from perfect. For example, in the cloud for “Battles” note the relative frequency of the word “day.” This is Voyant’s reading of “D-Day”, the operation that saw 156,000 troops landing on the beaches of Normandy.

By default, Voyant treats the hyphen in “D-Day” as a break between words, splitting the name in two and then disregarding the solitary letter “D”. Similarly, “Iwo Jima” is counted as two separate words: “Iwo” and “Jima.”
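To see why this happens, and how it might be fixed when we control the tokenizer, here is a minimal Python sketch. Neither function is Voyant’s actual code. `naive_tokens` imitates the behavior described above by splitting on any non-letter and dropping one-letter tokens. `phrase_aware_tokens` is a hypothetical remedy that keeps hyphenated words intact and joins a hand-picked list of multi-word names before counting.

```python
import re


def naive_tokens(text):
    """Split on anything that isn't a letter, then drop one-letter tokens.
    Mimics the behavior described in the post; not Voyant's real code."""
    return [t.lower() for t in re.findall(r"[A-Za-z]+", text) if len(t) > 1]


def phrase_aware_tokens(text, phrases=("Iwo Jima",)):
    """Keep hyphenated words whole and treat known multi-word names as one token.
    The phrase list is a hypothetical, hand-maintained input."""
    for phrase in phrases:
        # Swap the space inside each known (case-sensitive) phrase for an underscore.
        text = text.replace(phrase, phrase.replace(" ", "_"))
    # A token is letters, optionally joined to more letters by "-" or "_".
    return [t.lower() for t in re.findall(r"[A-Za-z]+(?:[-_][A-Za-z]+)*", text)]


sample = "D-Day, Iwo Jima, Okinawa"
print(naive_tokens(sample))         # ['day', 'iwo', 'jima', 'okinawa']
print(phrase_aware_tokens(sample))  # ['d-day', 'iwo_jima', 'okinawa']
```

The trade-off is that someone has to maintain the phrase list. That is one more reminder that cleaner results depend on refining the input as well as the tool.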

These sorts of imprecision can be frustrating, but they can be remedied. In the meantime, they serve as a valuable reminder: new and innovative tools can only go as far as the information fed into them. Whether the tool is a written questionnaire or software for statistical or textual analysis, it cannot go further than the raw data. More precise conclusions will require refining both our use of Voyant and the archival data itself.

Knowing this, we adjust our expectations. Instead of insight, we aim for an impression. This is where the breadth of the digital humanities toolkit is most appreciated. Despite our doubts about the reliability of individual entries, word clouds such as those made possible by Voyant convey the experiences contained in the Veterans History Project. They are familiar names, seared into American history.

Try exploring the archive for yourself and let us know what you find!
